What’s wrong with the pursuit of productivity with AI tools

Right now we’re getting seductive messages about how AI can boost your productivity and be cheaper in the long run. Every day, every hour, another new AI automation tool or AI agent is ready to be added to your company’s workflow, and the slogans sound so appealing: “AI agent for making decisions: with our tool you can make the best decisions for your business in minutes” or “You can build automated tests for your product in minutes and fire your entire in-house manual QA team”.

That’s all bollocks.

You have to understand the preconditions, how to work with AI tools, and what their limitations are. Using AI tools just because everyone else is using them is a path to failure.

You need clarity before you need AI

First, you need a clear understanding of what you want to achieve. You can’t adopt and implement AI tools blindly.

Ideally, you have data and metrics to compare against once you start using AI tools. This is huge. If you’re not sure about the benefits and you don’t have the data, your first step is to start collecting it. It’s the cornerstone.

Without baseline metrics you’re just guessing. And guessing with expensive tools is still guessing.
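Once you have a baseline, the comparison itself is trivial arithmetic. Here’s a minimal Python sketch, assuming you track one numeric metric (say, hours per sprint spent writing test cases) over a few periods before and after introducing a tool. The function name and the numbers are hypothetical, just to show the shape of the comparison.

```python
from statistics import mean
from typing import Sequence


def percent_change(baseline: Sequence[float], after: Sequence[float]) -> float:
    """Relative change (in %) of a metric's mean after adoption vs. the baseline period.

    Negative means the metric went down: good for things like hours spent,
    bad for things like test coverage, so interpret it per metric.
    """
    if not baseline or not after:
        raise ValueError("need measurements from both periods; no baseline, no comparison")
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean is zero, relative change is undefined")
    return (mean(after) - base) / base * 100


# Hypothetical numbers: hours spent writing test cases per sprint,
# three sprints before and three sprints after introducing an AI tool.
before = [10, 12, 11]
after = [8, 9, 10]
print(f"{percent_change(before, after):.1f}%")  # -18.2%
```

Even this toy version makes the point: without the `before` list, there’s nothing to put in the denominator.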

Garbage in, garbage out — but faster

Second, data quality requirements are absolutely huge. A good LLM engineer can’t boost your productivity, or your employees’ productivity, just by building some AI pipelines. It doesn’t work.

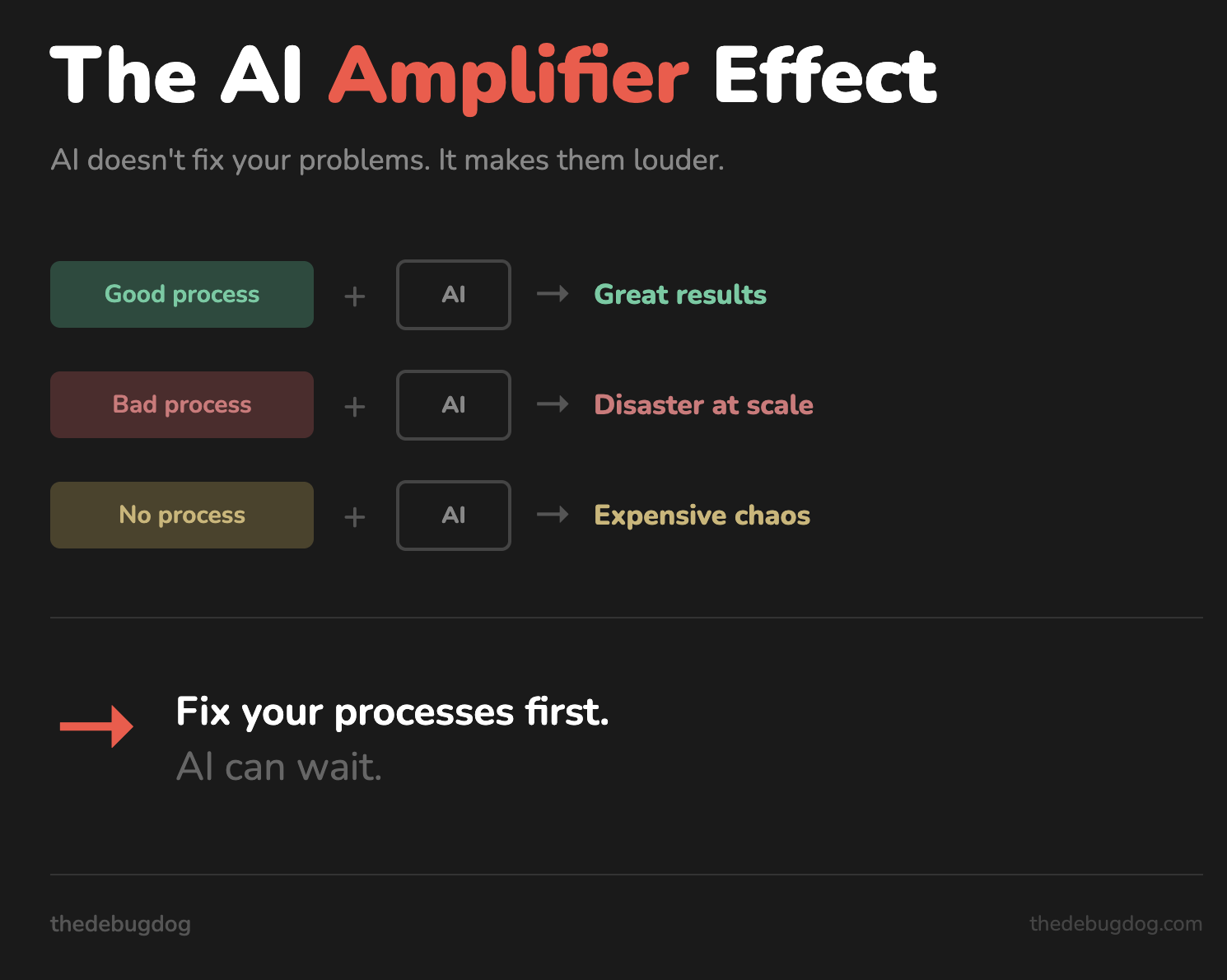

AI can help a good pipeline or process become more precise and productive. But if your processes and pipelines are shitty, AI will probably make them worse and more vulnerable.

Think about it this way: AI is an amplifier. It amplifies what you already have. Good processes become great. Bad processes become disasters at scale.

The AI tool graveyard

I’ve seen it dozens of times. A company buys a shiny AI tool. Marketing promises miracles. The team tries to implement it. Three months later, the tool is abandoned, the money is wasted, and the team is frustrated.

Here’s a classic scenario from the QA world. A company decides to implement an AI test generator. The sales guy shows a beautiful demo: “Look, it generates test cases automatically, you’ll save 70% of your time.” The company buys it. The QA team starts using it. And then reality hits:

- Generated tests don’t match actual user flows

- AI doesn’t understand business logic

- The team spends more time fixing generated tests than writing new ones

- Nobody documented the existing test strategy, so AI has nothing to learn from

Result? The tool subscription is cancelled after 6 months. Money burned. Team demoralized. And the worst part: now everyone in the company thinks “AI doesn’t work,” when the real problem was zero preparation.

This is the AI tool graveyard. Full of promising solutions that died because nobody asked the right questions before buying.

What AI actually does well (with conditions)

I’m not saying AI is useless. That would be stupid. AI works great — but only in specific conditions.

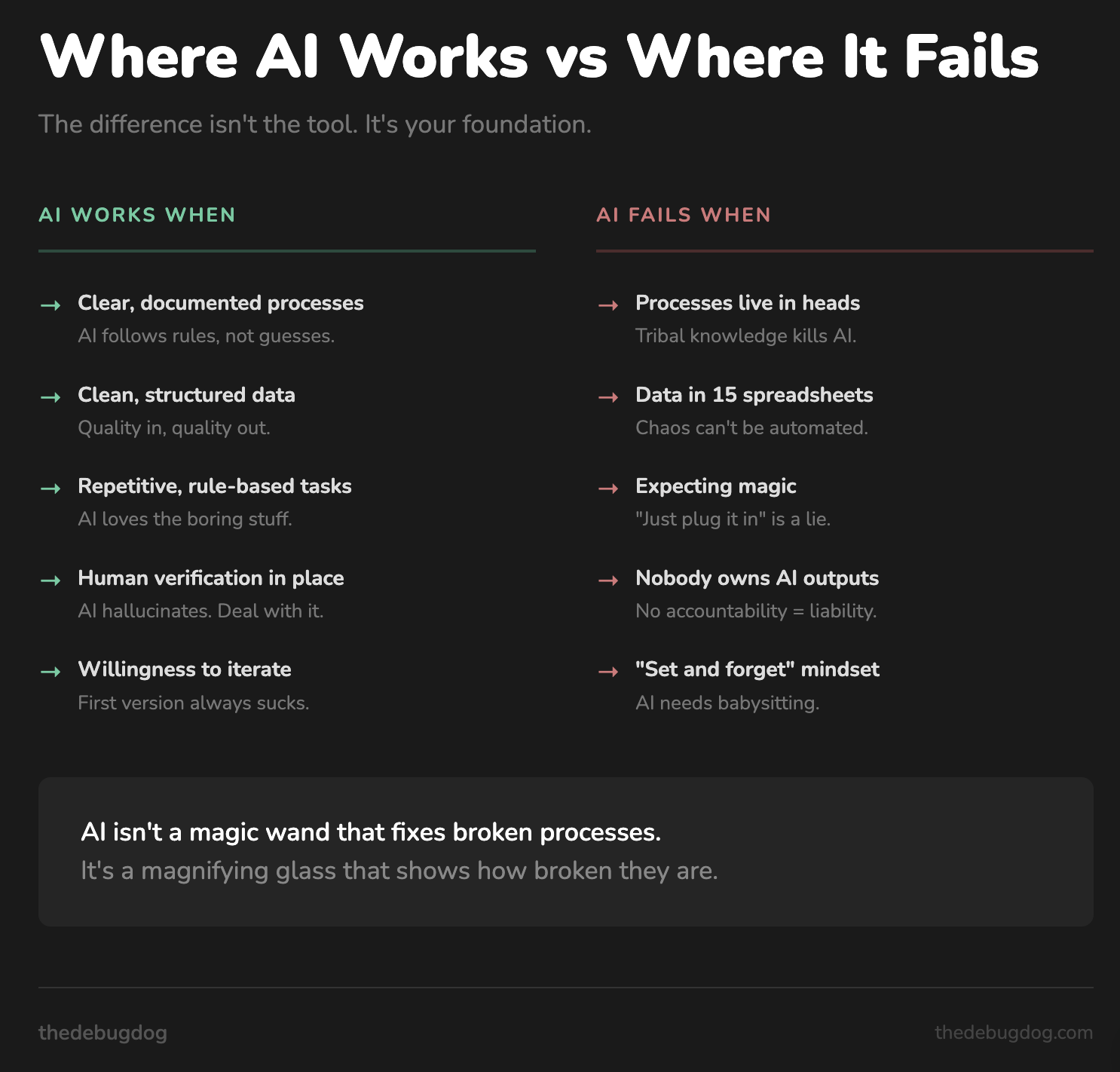

AI works when:

- You have clear, documented processes (AI can follow rules, not guess them)

- Your data is clean and structured (garbage in, garbage out — remember?)

- The task is repetitive and rule-based (AI loves boring stuff)

- You have humans to verify outputs (AI hallucinates, deal with it)

- You’re ready to iterate and adjust (the first version always sucks)

AI fails when:

- Processes exist only in someone’s head

- Data is scattered across 15 different spreadsheets

- You expect magic without preparation

- Nobody takes responsibility for AI outputs

- You think “set and forget” is a strategy

The difference between success and failure is not the tool. It’s the foundation you build before using the tool.

The dangerous illusion: juniors + AI without supervision

Here’s a scenario I see more and more often. A company wants to save money. They hire junior specialists and give them AI tools. The logic seems simple: “AI will help them work like seniors, so we’ll pay junior rates and get senior results.”

This is a trap. And it’s a dangerous one because it works… for a while.

A junior with an AI tool can produce output fast. They generate code, write test cases, create documentation. Everything looks great on the surface. Managers are happy: look how productive our new hire is! We’re so smart, we saved money!

But here’s the problem. A junior doesn’t have the domain knowledge to critically evaluate AI output. They don’t know what questions to ask. They don’t recognize when the AI is confidently wrong. They accept hallucinations as facts because they don’t have the experience to smell bullshit.

And AI is very good at sounding confident while being completely wrong.

So what happens? For weeks, maybe months, everything seems fine. The junior is shipping. Metrics look good. Then reality hits. Bugs in production. Security vulnerabilities. Test cases that don’t actually test anything meaningful. Documentation that describes how things should work, not how they actually work.

By the time you discover the damage, it’s already done. And fixing it costs way more than you “saved” by not hiring experienced people.

AI amplifies expertise. A senior with AI becomes a super-senior. A junior with AI becomes faster at making mistakes. Without deep domain knowledge, you can’t verify AI outputs. And unverified AI outputs in production are ticking time bombs.

This doesn’t mean juniors shouldn’t use AI. It means:

- Juniors need proper supervision when using AI tools

- Someone with domain expertise must review AI-assisted work

- You need processes that catch AI hallucinations before they reach production

- “AI will compensate for lack of experience” is a lie that will cost you money

If you’re thinking “we’ll just hire cheap juniors and give them ChatGPT” — you’re setting yourself up for expensive lessons.

Where AI actually becomes a game changer

Okay, enough doom and gloom. Let me tell you where AI actually delivers.

Remember how I said AI amplifies what you already have? This works both ways. If you have good processes and experienced people, AI becomes a ridiculous productivity multiplier.

Documentation and knowledge bases. This used to be the thing everyone hated. Nobody wants to update docs. Nobody wants to maintain knowledge bases. It’s boring, it takes time, and it’s always outdated by the time you finish writing it.

Now? With proper AI flow, you can keep your docs actually up to date. Meeting happened, decisions were made — AI drafts the updates, human reviews and approves. What used to take hours now takes minutes. And suddenly your knowledge base is not a graveyard of outdated information.

Here’s a scenario that used to be fantasy. The product team discusses conceptual changes. Big strategic stuff. In the old world, someone takes notes, then spends 3 hours turning those notes into a proper vision document. Maybe it happens next week. Maybe never.

With AI in the loop: discussion happens, AI drafts the vision based on meeting context, product manager reviews and edits. 30 minutes instead of 3 hours. And this is at senior management level — people whose time is expensive.

Process documentation. Same story. Process changed? Instead of “we’ll update the docs later” (which means never), AI generates the draft immediately. Human reviews, approves, done. Your processes are actually documented and current.

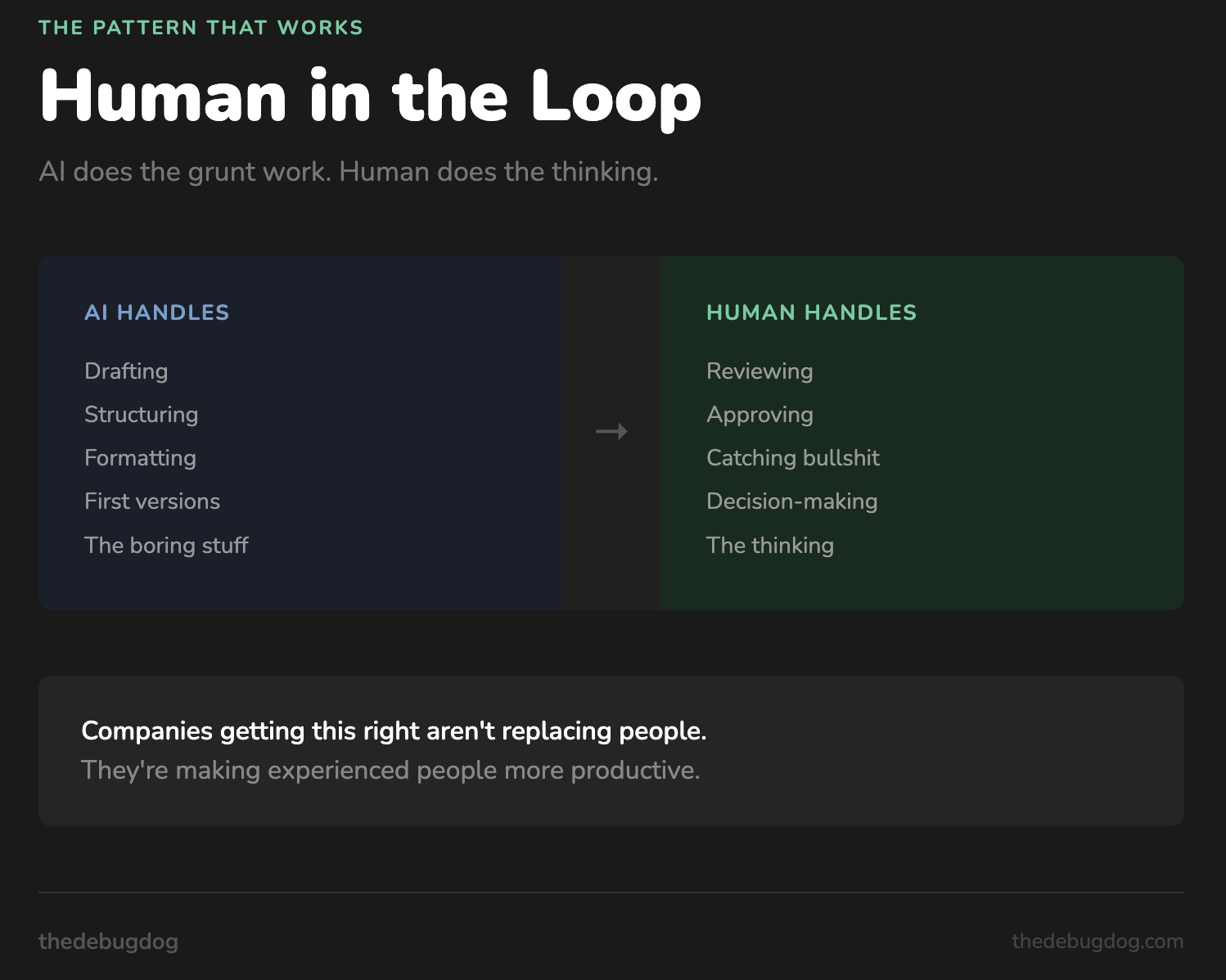

The magic ingredient: human in the loop.

This is critical. AI doesn’t replace decision-making. AI handles the grunt work — drafting, structuring, formatting, initial versions. Human handles the thinking — reviewing, approving, catching bullshit, making sure it actually makes sense.

The senior manager doesn’t write the vision doc from scratch. They review and refine what AI drafted. That’s a massive time saving without sacrificing quality.

An experienced engineer doesn’t write boilerplate code. They review and improve what AI generated. That’s a productivity gain with a safety net.

The QA lead doesn’t write test case descriptions from scratch. They review AI-generated cases and add the edge cases AI missed. Faster coverage, human expertise still in control.

This is the pattern that works:

- AI does the boring, time-consuming parts

- Human does the thinking, reviewing, decision-making

- Result: faster output, maintained quality, no hallucination disasters
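To make the pattern concrete, here’s a minimal Python sketch of a review gate, assuming a simple in-memory knowledge base. `Draft`, `review`, and `publish` are hypothetical names, not any particular tool’s API. The point is the rule they enforce: AI output starts as a draft, and only a named human can move it to approved.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    DRAFT = "draft"        # produced by the AI step, not trusted yet
    APPROVED = "approved"  # signed off by a named human reviewer
    REJECTED = "rejected"  # sent back; a human decided it's wrong


@dataclass
class Draft:
    content: str
    status: Status = Status.DRAFT
    reviewer: Optional[str] = None
    notes: List[str] = field(default_factory=list)


def review(draft: Draft, reviewer: str, approve: bool, note: str = "") -> Draft:
    """Human step: someone specific takes responsibility for the output."""
    if not reviewer.strip():
        raise ValueError("a review needs a named, responsible human")
    draft.reviewer = reviewer
    draft.status = Status.APPROVED if approve else Status.REJECTED
    if note:
        draft.notes.append(note)
    return draft


def publish(draft: Draft, knowledge_base: List[str]) -> None:
    """Gate: nothing reaches the knowledge base without human approval."""
    if draft.status is not Status.APPROVED:
        raise PermissionError(f"cannot publish a draft with status {draft.status.value!r}")
    knowledge_base.append(draft.content)


kb: List[str] = []
# Hypothetical AI output, e.g. a summary drafted from meeting notes.
draft = Draft("Release checklist now requires sign-off from the QA lead.")
try:
    publish(draft, kb)  # blocked: still an unreviewed draft
except PermissionError as err:
    print(err)
review(draft, reviewer="qa-lead", approve=True, note="Added the rollback step the AI missed.")
publish(draft, kb)
print(kb)
```

In a real setup the gate would live in whatever tool hosts your docs (a required approval on a pull request, for example), but the principle is the same: the AI drafts, a person signs.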

The companies that get this right are not replacing people with AI. They’re making their experienced people more productive. And that’s where the real ROI is — not in firing your QA team and hoping AI will figure it out.

The real cost of AI tools

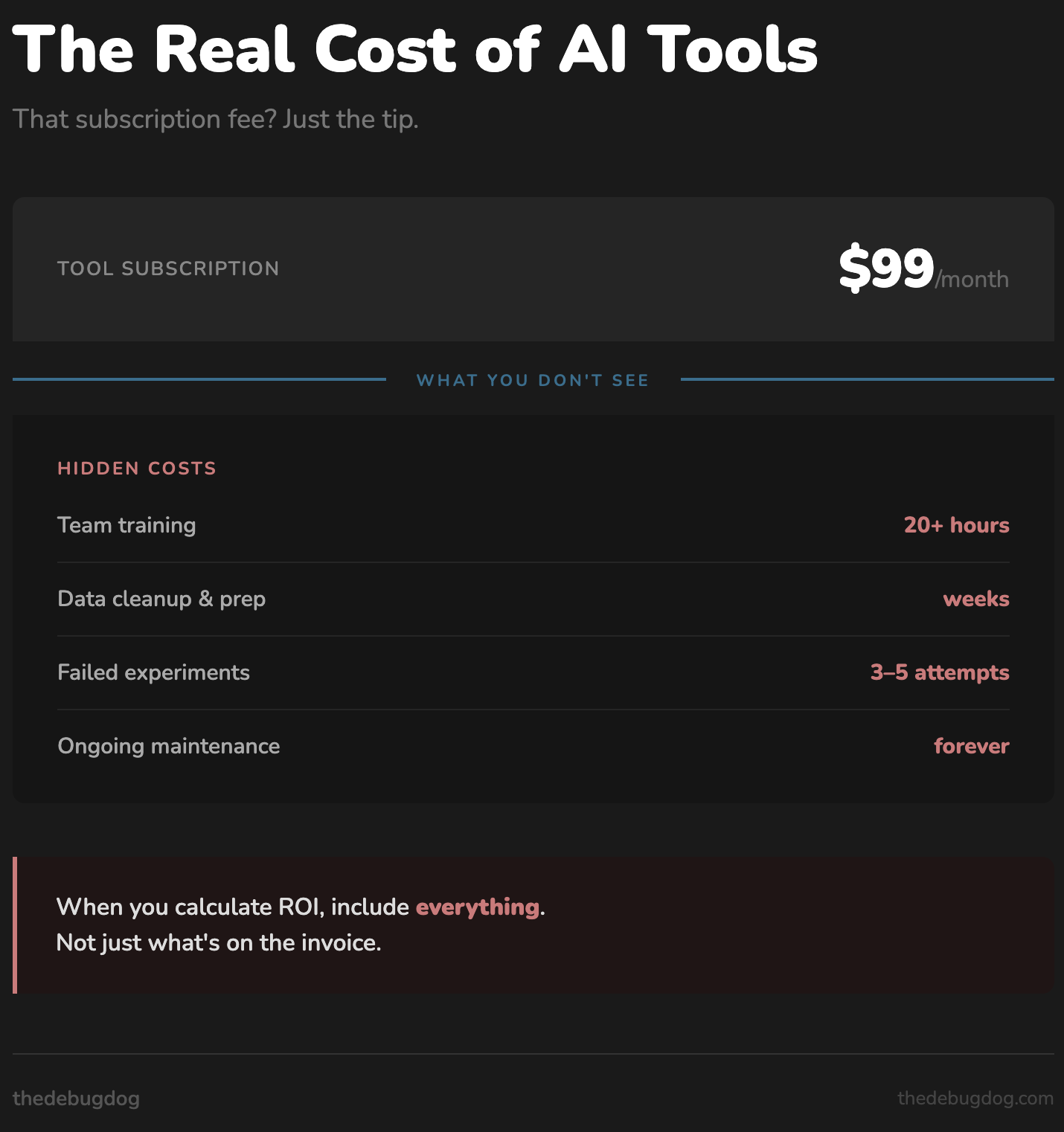

That $99/month subscription? It’s just the tip of the iceberg.

Here’s what’s hiding below the surface:

Visible cost: Tool subscription — $99/month

Hidden costs:

- Training time for team — 20+ hours

- Data preparation and cleanup — weeks of work

- Process documentation — if you don’t have it, you need it first

- Failed experiments — you will fail, multiple times

- Team resistance — people don’t like new tools forced on them

- Integration headaches — your current stack won’t cooperate easily

- Maintenance — AI pipelines need babysitting

When you calculate real ROI, include everything. Not just the subscription fee. Most companies don’t do this math. They see “$99/month” and think it’s cheap. It’s not cheap if you spend 200 hours preparing for it and another 100 hours fixing broken implementations.
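Here’s that math as a minimal Python sketch for the first year. The $99/month, 200 hours of preparation, and 100 hours of fixing come from the example above; the $50/hour cost of a person-hour is a hypothetical assumption, so swap in your own numbers and add the other hidden-cost lines (training, maintenance) as you measure them.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class AIToolCost:
    subscription_per_month: float
    hidden_hours: Dict[str, float]  # people-hours spent around the tool, by activity
    hourly_rate: float              # assumed cost of one person-hour

    def hidden_cost(self) -> float:
        return sum(self.hidden_hours.values()) * self.hourly_rate

    def total(self, months: int = 12) -> float:
        return self.subscription_per_month * months + self.hidden_cost()

    def subscription_share(self, months: int = 12) -> float:
        """Fraction of the total cost that the visible subscription fee represents."""
        return self.subscription_per_month * months / self.total(months)


cost = AIToolCost(
    subscription_per_month=99,
    hidden_hours={"preparation": 200, "fixing broken implementations": 100},
    hourly_rate=50,  # hypothetical rate, plug in your own
)
print(cost.total())                                      # 16188
print(f"{cost.subscription_share():.1%} of the total")  # 7.3% of the total
```

Under these assumptions the “cheap” subscription is only about 7% of the first-year cost. The rest is people’s time.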

Before you buy that AI tool

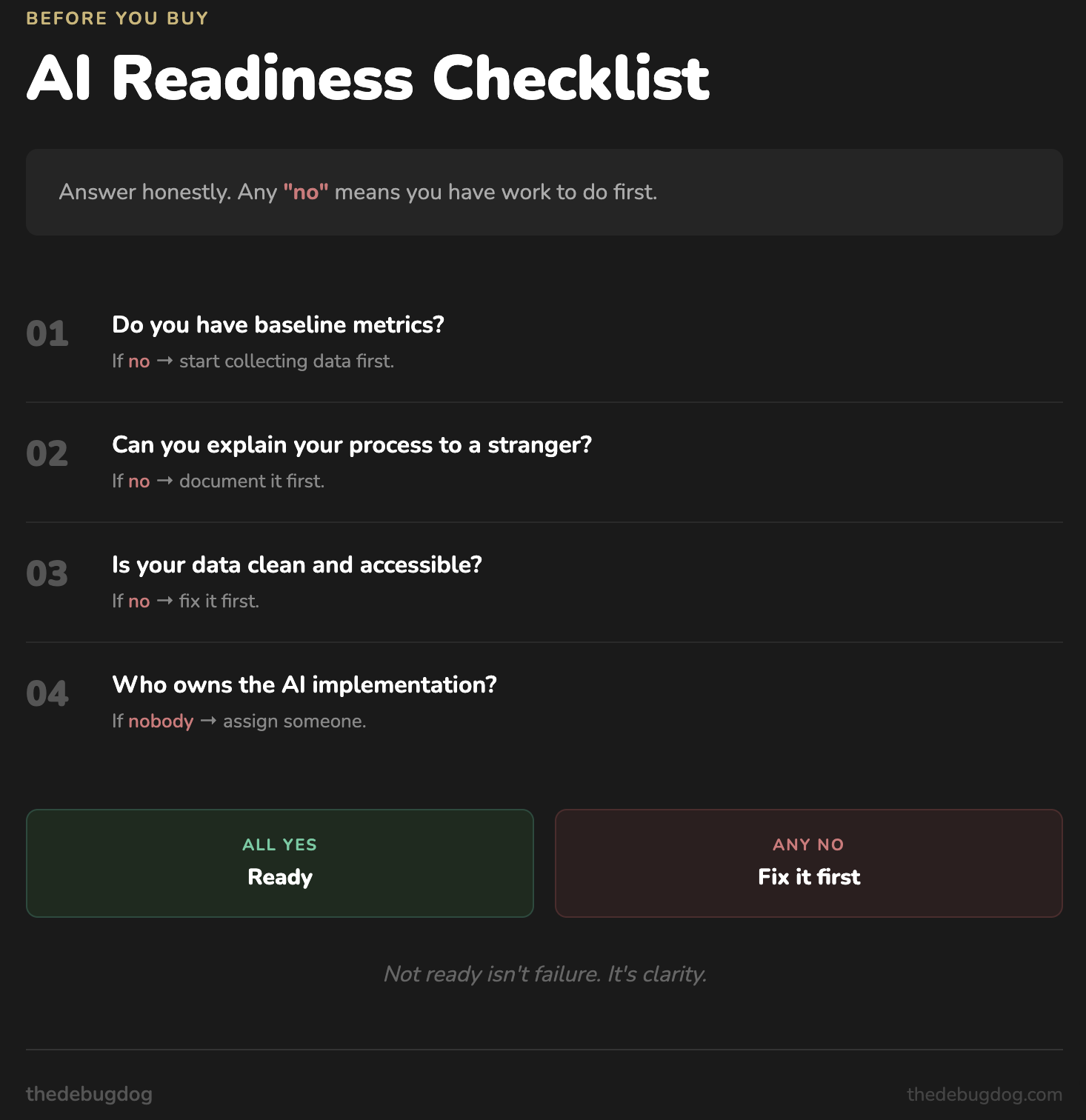

Stop. Before you add another AI tool to your stack, answer these questions honestly:

- Do I have baseline metrics? If no — start collecting data first. You need to know where you are before measuring improvement.

- Can I explain my current process to a stranger? If no — document it. If you can’t explain it to a human, AI won’t figure it out either.

- Is my data clean and accessible? If no — fix it. AI trained on mess produces mess.

- Who will own the AI implementation? If “everyone” or “no one” — assign someone. Shared responsibility means no responsibility.

- What happens when AI makes mistakes? If no answer — create a plan. AI will make mistakes. You need a human safety net.

- Am I ready to iterate for months? If no — don’t start. AI implementation is not a one-time project.

If you answered “no” to more than two questions — you’re not ready. And that’s okay. Better to know it now than after burning money and trust.
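If you want to run this as a team exercise, the rule is simple enough to write down. A minimal Python sketch, assuming each question is rephrased as a yes/no check (the “who owns it” and “what happens when AI makes mistakes” questions become “is there a named owner” and “is there a plan”). The names are hypothetical; the threshold is the “more than two no answers” rule from the text.

```python
from typing import Dict, List, Tuple

# Yes/no paraphrases of the six questions above ("yes" = you're covered).
CHECKLIST: Tuple[str, ...] = (
    "Do we have baseline metrics?",
    "Can we explain the current process to a stranger?",
    "Is our data clean and accessible?",
    "Does one named person own the AI implementation?",
    "Do we have a plan for when the AI makes mistakes?",
    "Are we ready to iterate for months?",
)


def readiness(answers: Dict[str, bool], max_no: int = 2) -> Tuple[bool, List[str]]:
    """Return (ready, gaps). Ready means at most `max_no` questions were answered "no"."""
    unanswered = [q for q in CHECKLIST if q not in answers]
    if unanswered:
        raise ValueError(f"answer every question first: {unanswered}")
    gaps = [q for q in CHECKLIST if not answers[q]]
    return len(gaps) <= max_no, gaps


answers = dict.fromkeys(CHECKLIST, True)
answers["Do we have baseline metrics?"] = False
answers["Is our data clean and accessible?"] = False
answers["Does one named person own the AI implementation?"] = False
ready, gaps = readiness(answers)
print("ready" if ready else "not ready yet, fix these first:")
for gap in gaps:
    print(" -", gap)
```

The output that matters isn’t the verdict, it’s the `gaps` list: that’s your to-do list before any tool evaluation.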

The real question

Everyone is asking “Which AI tool should we use?”

Wrong question.

The right question is: “Are our processes good enough to benefit from AI?”

For most companies, the honest answer is no. And that’s nothing to be ashamed of. It’s a starting point. Fix your processes first. Document everything. Clean your data. Train your team.

Then — and only then — start looking at AI tools.

AI can wait. Your broken processes can’t.
